So here it is, late on a Saturday, and I'm off in the morning to teach an Office 365 class. In a fit of overexcitement, I decided to try to build out the lab environment on my laptop before travelling to the class. The class is run as a long-day boot camp, and in the past the start of the course has been challenging because of issues with building out the environment. I hoped to avoid that this week!

This class is one of the first from Microsoft to utilise Azure. The student's "on premises" data centre is in Azure, and the course looks at adding Office 365 into the mix. It's a fantastic idea – the student just runs a few scripts and hey presto – their live environment (DC, Exchange, etc, etc, etc) is all built by script as part of the first lab. And amazingly – this just takes around two hours according to the lab manual. What could possibly go wrong?

Well – the last time, those two hours turned into six, as we had some errors at the start with the first script not running properly. Of course, I was able to troubleshoot and fix the issues, although it did take time. So I thought it might be a clever idea to re-test the scripts this time and maybe avoid the issues.

I downloaded the first VM and installed it onto my Windows 10 client, then started running the build scripts. All was going well until I tried to run the script to create the DC. For several hours, each time I tried to create the VM, I got the error: "CurrentStorageAccountName is not accessible. Ensure the current storage account is accessible and in the same location or affinity group as your cloud service." All the normal methods (i.e. looking at Google and reading the articles found) did not help. None of the suggested fixes applied to my situation. After two hours I was stuck.

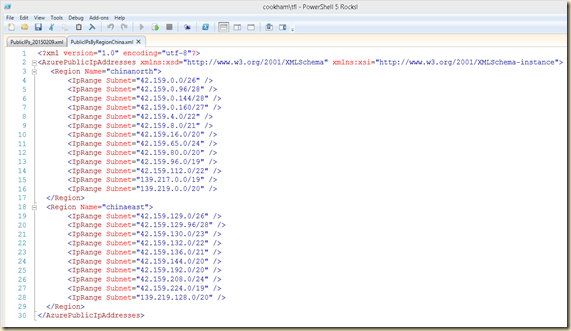

Then, by this time on the third page of Google results, I came across a trick that should have occurred to me earlier: use the -Debug switch on the call to New-AzureVM (the cmdlet that was failing). With it, I was able to see the HTTP traffic to the underlying REST management API that the Azure PowerShell cmdlets use.

What I saw was the client asking for my storage account details, but the response claimed that the storage account did not exist. On close inspection, I could see that the storage account name being requested was almost, but not quite, the correct one. When creating the storage account, the script asked for user input (partner ID and student ID), then created a storage account with a unique per-student name – a nice touch. In my case, I entered a partner number beginning with a 0 (zero). Looking closely at the script, part of it strips off the leading zero and uses the zero-less storage account name for later operations – and of course that fails. To fix things, I just removed everything created thus far from Azure, re-ran the scripts with better input, and away I went.
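I haven't reproduced the lab script here, so the following is only a minimal sketch of how this kind of mismatch can arise. It assumes the cause is a numeric conversion somewhere in the script; the variable names, the sample IDs and the `stor` name format are all hypothetical.

```powershell
# Hypothetical input; the real lab script prompts the user for these values
$partnerId = '012345'
$studentId = '07'

# Name built from the raw strings: the leading zero survives
$createdName = "stor$partnerId$studentId"

# Name built after converting the partner ID to a number (assumed cause):
# [int]'012345' is 12345, so the leading zero is silently lost
$lookupName = "stor$([int]$partnerId)$studentId"

$createdName                    # stor01234507
$lookupName                     # stor1234507
$createdName -eq $lookupName    # False
```

The two names differ by a single character, which is exactly the sort of thing that is easy to miss in an error message and easy to spot once you can see the actual request.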

There are two lessons here. The first (as I tell all my students in almost every PowerShell class): all user input is evil unless tested and proven otherwise. If you are writing a script that accepts user input, code defensively and assume the user is going to enter duff data. The script should have checked to ensure the partner ID entered did not start with a zero – it's a production script, after all. Of course, I probably should have used, and eventually did use, a partner number not starting with zero. So the underlying cause is user error. Still, a good lesson is that, given a chance, most users can do dumb things and you need to write scripts accordingly.
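As a rough illustration of that kind of defensive check, here is a minimal sketch of a validation function. `Test-PartnerId` is a hypothetical name, and the rule it enforces (digits only, first digit not zero) is my assumption about what a valid partner ID looks like, not something taken from the lab script.

```powershell
# Hypothetical helper: returns $true only for IDs made of digits
# that do not start with a zero (assumed rule, not from the lab script)
function Test-PartnerId {
    [CmdletBinding()]
    [OutputType([bool])]
    param(
        [Parameter(Mandatory)]
        [AllowEmptyString()]
        [string] $PartnerId
    )

    # ^[1-9] rejects a leading zero; [0-9]*$ rejects anything non-numeric
    return $PartnerId -match '^[1-9][0-9]*$'
}

Test-PartnerId -PartnerId '012345'   # False
Test-PartnerId -PartnerId '123450'   # True
Test-PartnerId -PartnerId '12a45'    # False
```

A script could call something like this in a loop around its input prompt and refuse to continue until the value passes, rather than discovering the problem several steps later.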

The second one is the value of the -Debug switch when using any Azure PowerShell cmdlet. There can be quite a lot of output, but the ability to see what the cmdlet is doing can be invaluable. In my case, it took only seconds to work out the problem once I'd seen the debug output from the call to New-AzureVM. I recommend you play with this as you get more familiar with Azure PowerShell – it can certainly help in troubleshooting other people's scripts!
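Debug output works the same way for any cmdlet or advanced function, so you can experiment without an Azure subscription. The sketch below uses a hypothetical stand-in function, `Get-DemoStorageAccount`, which is not an Azure cmdlet and only mimics one by writing a debug message. It shows one way to capture the debug stream so that a large amount of output can be searched.

```powershell
# Hypothetical stand-in for a cmdlet that talks to a REST API.
# It is NOT an Azure cmdlet; it only writes a debug message like one might.
function Get-DemoStorageAccount {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [string] $Name
    )

    Write-Debug "HTTP GET (illustrative): storage account '$Name'"
    "Finished lookup for $Name"
}

# With the debug preference set to Continue, debug messages are emitted
# without prompting; 5>&1 merges the debug stream into the normal output
$DebugPreference = 'Continue'
$captured = Get-DemoStorageAccount -Name 'stor1234507' 5>&1
$DebugPreference = 'SilentlyContinue'

# Keep only the debug records, so they can be read or searched at leisure
$debugMessages = $captured |
    Where-Object { $_ -is [System.Management.Automation.DebugRecord] } |
    ForEach-Object { $_.Message }

$debugMessages
```

On a real cmdlet the equivalent is simply adding -Debug to the failing call. One thing to be aware of: in Windows PowerShell, -Debug stops and asks for confirmation at each debug message, whereas setting `$DebugPreference` to `Continue` (as above) lets the output scroll past uninterrupted.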